Weather nowcasting is a subfield of meteorology that focuses on hyper-local forecasting at short lead times, typically up to six hours. Like meteorology in general, nowcasting benefits from machine learning techniques, which can learn complex patterns from data and produce predictions quickly and efficiently.

We used our open-source software, Flowdapt, to explore the potential of machine learning for nowcasting. The results of this study were released in 2023, with full details available here.

Completed experiments

The objective of the present experiment is to compare the performance of four model architectures

- XGBoost decision tree ensemble, fully connected PyTorch ANN, PyTorch Transformer, PyTorch LSTM -

for predicting three weather variables

- temperature, cloud cover, wind speed -

at six time horizons

- 1hr, 2hr, 3hr, 4hr, 5hr, 6hr -

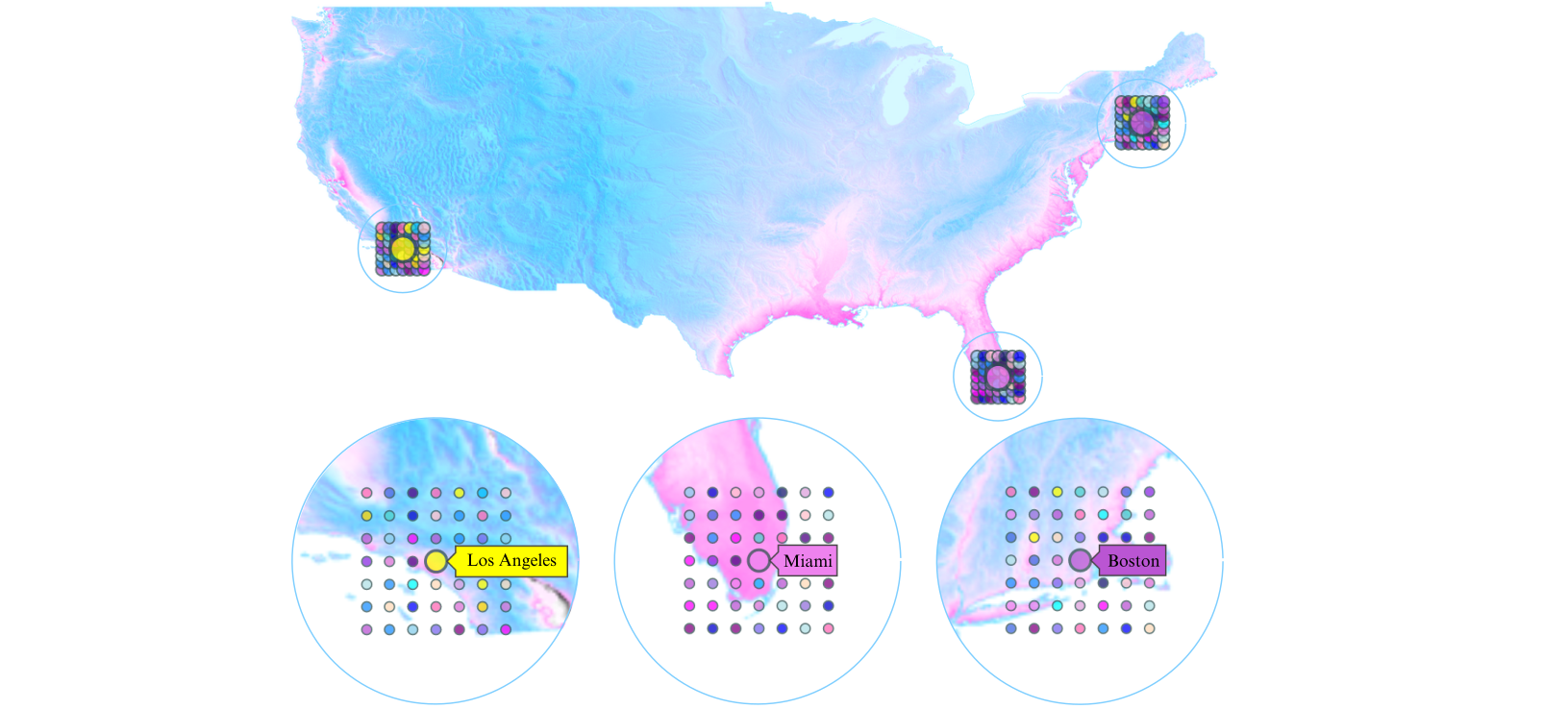

for three cities in the USA

- Los Angeles, Miami, Boston -
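The factors listed so far can be enumerated as a simple grid. The following is a minimal sketch of that enumeration, not the experiment's actual code; the variable names are hypothetical, and whether each combination corresponds to a separately trained model is an assumption the text does not confirm.

```python
from itertools import product

# Factors as listed in the text; the identifiers are illustrative only.
ARCHITECTURES = ["XGBoost", "PyTorch ANN", "PyTorch Transformer", "PyTorch LSTM"]
VARIABLES = ["temperature", "cloud cover", "wind speed"]
HORIZONS_HR = [1, 2, 3, 4, 5, 6]
CITIES = ["Los Angeles", "Miami", "Boston"]

# Every (architecture, variable, horizon, city) combination being compared.
combos = list(product(ARCHITECTURES, VARIABLES, HORIZONS_HR, CITIES))

print(len(combos))  # 4 * 3 * 6 * 3 = 216
```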

Each model architecture has four different configurations:

- Hourly retraining on 140 days of historical data -

- Hourly retraining on an amount of historical data adaptively determined using the Variance Horizon -

- Retraining every X hours, determined using Concept Drift Detection, on 140 days of historical data -

- Retraining every X hours, determined using Concept Drift Detection, on an amount of historical data adaptively determined using the Variance Horizon -
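To illustrate the idea behind drift-triggered retraining, here is a minimal sketch of one possible trigger. It is not the Concept Drift Detection method used in the experiment, which the text does not specify. It assumes a simple threshold rule on recent forecast errors, and the function name `should_retrain` and its parameters are hypothetical.

```python
from statistics import mean, stdev

def should_retrain(errors, baseline_size=48, recent_size=6, k=2.0):
    """Return True when recent errors drift above the baseline.

    errors: chronological list of absolute forecast errors, one per hour.
    The rule (an assumption, not the experiment's detector) flags drift when
    the mean of the last `recent_size` errors exceeds the baseline mean plus
    k baseline standard deviations.
    """
    if len(errors) < baseline_size + recent_size:
        return False  # not enough history to judge
    baseline = errors[-(baseline_size + recent_size):-recent_size]
    recent = errors[-recent_size:]
    threshold = mean(baseline) + k * stdev(baseline)
    return mean(recent) > threshold

stable = [1.0, 1.1] * 24 + [1.0] * 6
drifted = [1.0, 1.1] * 24 + [2.0] * 6
print(should_retrain(stable), should_retrain(drifted))
```

With a rule like this, the retraining interval X is not fixed in advance: the model is retrained only when the error statistics indicate that the existing model no longer fits the incoming data.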

The forecasts are based on weather data for a 300x300 km grid surrounding each city, obtained from the open-source Open-Meteo weather API, and are compared to the forecasts from the NOAA Global Forecast System (GFS).
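As an illustration of how a 300x300 km grid around a city could be laid out, the sketch below converts the box to latitude/longitude offsets. The number of points per side (`n=5`), the function name `grid_points`, and the flat-earth approximation of about 111.32 km per degree of latitude are assumptions made for this example; the actual grid spacing used in the experiment is not stated here.

```python
import math

KM_PER_DEG_LAT = 111.32  # approximate length of one degree of latitude

def grid_points(lat, lon, side_km=300.0, n=5):
    """Return n*n (lat, lon) points covering a side_km square centred on (lat, lon)."""
    half = side_km / 2
    dlat = half / KM_PER_DEG_LAT
    # A degree of longitude shrinks with the cosine of the latitude.
    dlon = half / (KM_PER_DEG_LAT * math.cos(math.radians(lat)))
    lats = [lat - dlat + 2 * dlat * i / (n - 1) for i in range(n)]
    lons = [lon - dlon + 2 * dlon * j / (n - 1) for j in range(n)]
    return [(la, lo) for la in lats for lo in lons]

# Approximate coordinates of central Los Angeles.
points = grid_points(34.05, -118.24)
print(len(points))  # 25
```

Each point could then be passed to a weather API as a separate location query, giving the model spatial context around the city rather than a single observation.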

Below are screenshots from the dashboard reporting live results during the experiment.

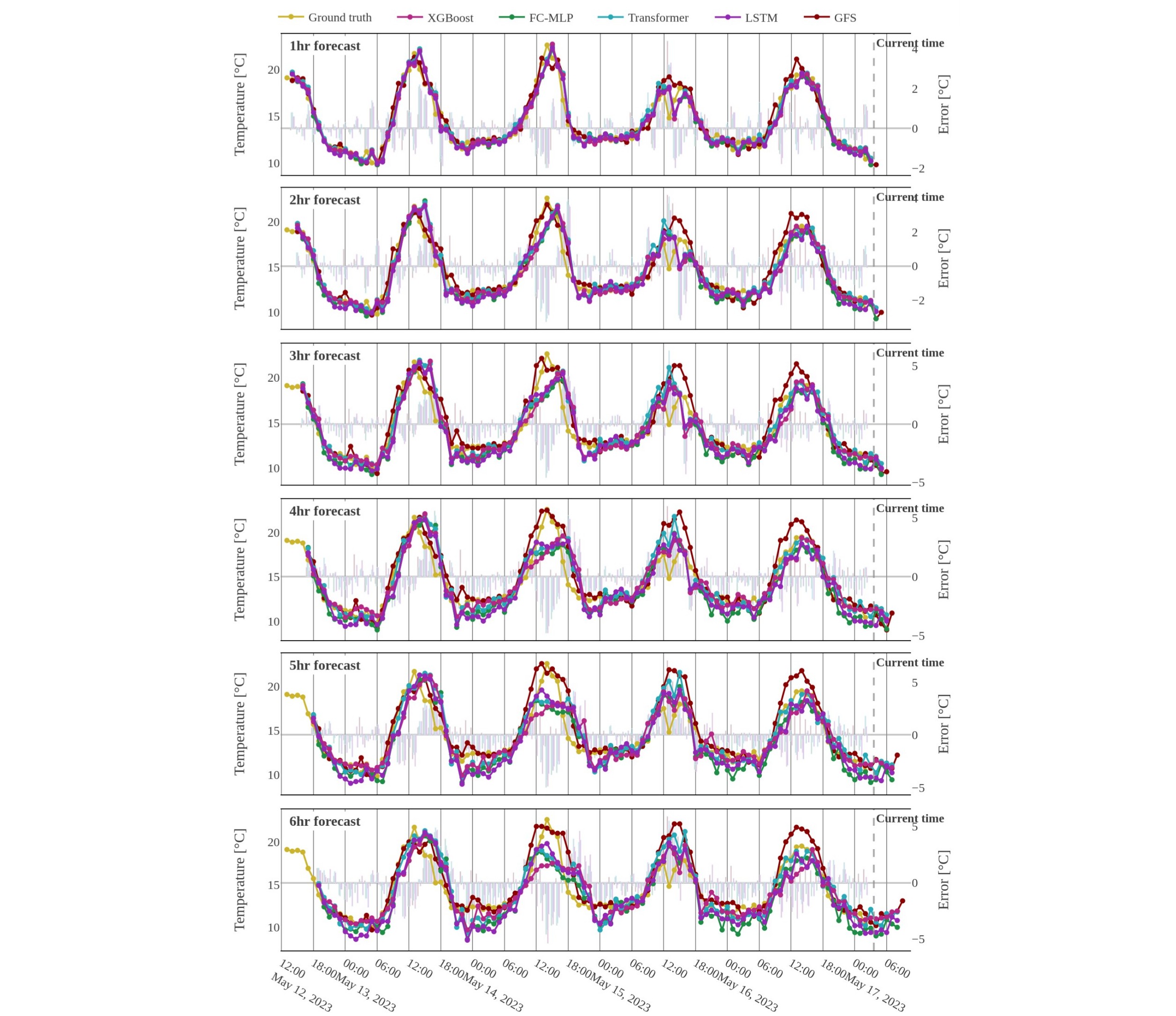

Los Angeles temperature forecasts for hourly retraining on 140 days of historical data

Forecast locations and grid placement

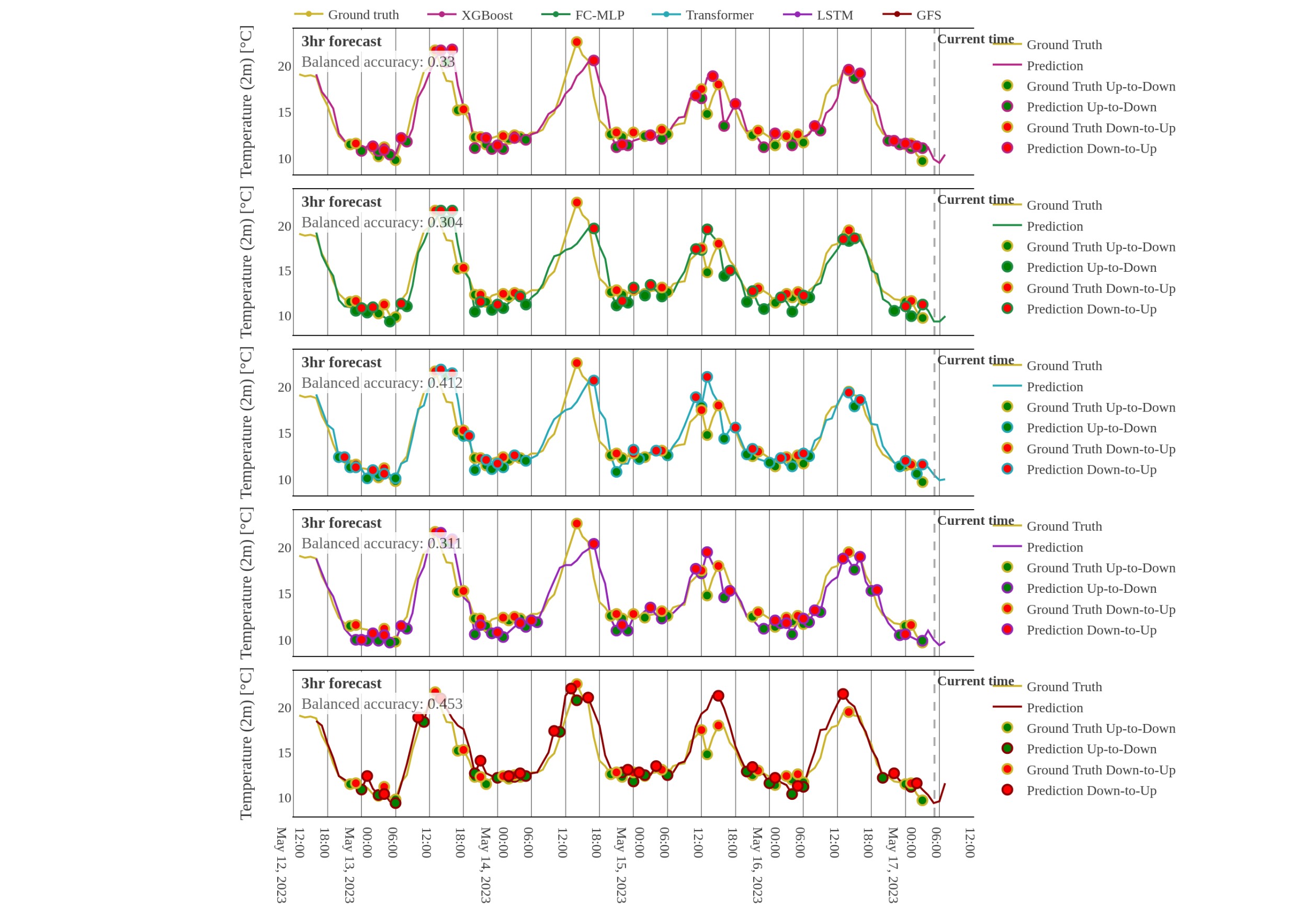

Reversal accuracy for the Los Angeles temperature forecasts